Using the Squid Reverse Proxy to Improve eZ Publish Performance

Squid overview

Squid is a caching proxy: it stores the responses to HTTP requests and serves later requests for the same resource from its cache, which is faster than generating them again. When requests are served from the cache, the webserver does not have to expend resources to regenerate pages, transfer images, and so on. Squid stores XHTML pages, images, CSS files, JavaScript and other data transmitted over HTTP via the proxy, as long as the application (eZ Publish in this case) marks the data as cacheable. With eZ Publish you can cache the following:

- Complete pages by using the header override features (available in eZ Publish 3.8 and greater)

- Static files such as CSS and JavaScript

- Database content such as images, media and binary files

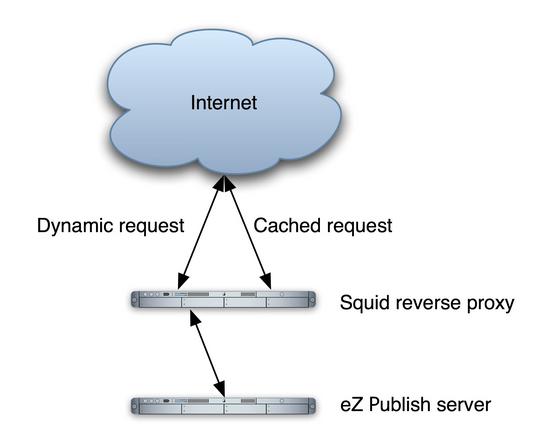

The diagram below shows an example architecture using Squid.

An example architecture using Squid

Squid resides on a separate server in front of the eZ Publish webserver(s). The Squid server caches the user HTTP requests, speeding up the serving of pages and files and also lowering the load on the eZ Publish server. With Squid, many requests are only sent through to the webserver the first time. Subsequently, they are intercepted and served by Squid until the requested item's cache expires. (Cache expiry will be discussed later in this article.) This frees up resources for unique or dynamic requests that need processing by the webserver. If you are running eZ Publish in a clustered environment, Squid should reside in front of the load balancer. This is the simplest setup and allows you to cache all servers at the same time.
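The request flow described above can be illustrated with a toy model. This is only a sketch of the idea, not how Squid is implemented: `ToyReverseProxy`, `origin` and `origin_hits` are names invented for this example, and the "webserver" is just a Python callable that returns a body and a lifetime in seconds.

```python
import time


class ToyReverseProxy:
    """Caches origin responses per URL until their expiry time passes."""

    def __init__(self, origin, clock=time.time):
        self.origin = origin      # hypothetical webserver: url -> (body, ttl_seconds)
        self.clock = clock        # injectable clock, which makes expiry easy to test
        self.cache = {}           # url -> (body, expires_at)
        self.origin_hits = 0      # number of requests that reached the webserver

    def get(self, url):
        entry = self.cache.get(url)
        if entry is not None and entry[1] > self.clock():
            return entry[0]       # still fresh: served from the cache
        body, ttl = self.origin(url)  # missing or expired: ask the webserver
        self.origin_hits += 1
        self.cache[url] = (body, self.clock() + ttl)
        return body
```

Requesting the same URL twice within its lifetime increments `origin_hits` only once; after the lifetime has passed, the next request goes through to the webserver again.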

Squid installation

On Debian-based Linux systems, you can install Squid with apt-get. You should also install the squidclient command-line tool, which is used later in this article to purge cached items. Squid is also available in other package formats and on most other platforms; see the About Squid FAQ for a list of supported operating systems.

The following example shows the commands used to install Squid on Debian-based Linux systems using apt-get.

    # apt-get install squid
    # apt-get install squid-client

To start Squid, run the following command:

# /etc/init.d/squid start

Squid configuration

After installing Squid, you must modify the configuration file to suit your installation. The configuration file shown below is the one used in the eZ performance lab.

    # cat /etc/squid/squid.conf
    # eZ Publish cluster cache
    acl all src 0.0.0.0/0.0.0.0
    visible_hostname babylonproxy.ezcluster.no
    icp_access allow all

    # Make Squid listen on port 80
    http_port 80

    # tell squid to contact the real webserver
    httpd_accel_host 192.168.0.3
    httpd_accel_port 80
    httpd_accel_uses_host_header off

    # Disable proxy support
    httpd_accel_with_proxy off
    http_access allow all

The significant settings in this file are http_port, httpd_accel_host and httpd_accel_port. The first defines the port on which Squid listens. The latter two define the server and port where eZ Publish is installed.

If Squid is running on the same server as the eZ Publish installation, you would run Apache on, for example, port 81 and Squid on port 80. You would then change the httpd_accel_port value to 81.

Security

By default, Squid can act as both a proxy and a reverse proxy accelerator. You normally do not want the Squid server to be used as a general proxy, because anyone could then use it to access other servers on the internet on their behalf. This would slow down the Squid server with unnecessary requests and create a security risk.

We only want Squid to allow access to the webservers (in our case, the eZ Publish webserver) we want to accelerate. The setting below ensures this.

httpd_accel_with_proxy off

In order for Squid to be able to cache requests, eZ Publish must return headers that identify the response as cacheable. In most cases, eZ Publish does this automatically; however, you can fine-tune this by overriding the default headers.

The headers below show a typical cacheable response returned from eZ Publish. The "Pragma" and "Cache-Control" headers, which control caching behavior, are sent with empty values so that they do not forbid caching. The "Expires" header defines the period of time during which the item can be cached before the request needs to be sent through to the webserver again. In this case, the expiry time is set to ten minutes (600 seconds).

header("Pragma: ");

header("Cache-Control: ");

header("Expires: " . gmdate('D, d M Y H:i:s', time() + 600) . " GMT");
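When checking these headers from a test script rather than from PHP, it can help to compute the expected "Expires" value independently. The following is a minimal sketch in Python; `expires_header` is a hypothetical helper name, and the only library call used is the standard `email.utils.formatdate`, which produces an HTTP-style date (note the space before "GMT").

```python
import time
from email.utils import formatdate


def expires_header(ttl_seconds, now=None):
    """Build an Expires header line that lies ttl_seconds in the future."""
    if now is None:
        now = time.time()         # current Unix time, as PHP's time() returns
    # usegmt=True formats the date as "Thu, 01 Jan 1970 00:10:00 GMT"
    return "Expires: " + formatdate(now + ttl_seconds, usegmt=True)
```

Calling `expires_header(600)` yields a header that expires ten minutes from now, matching the PHP example.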

eZ Publish settings

Here is an eZ Publish configuration to generate headers specifying cacheable requests for Squid:

settings/siteaccess/PUBLIC_SITE_ACCESS/site.ini.append.php

    [HTTPHeaderSettings]
    # Enable/disable custom HTTP header data.
    CustomHeader=enabled

    # Cache-Control values are set directly
    Cache-Control[]
    Cache-Control[/]=

    # Expires specifies time offset compared to current time
    # Default expired 2 hours ago ( no caching )
    Expires[]
    Expires[/]=300

    # Pragma values are set directly
    Pragma[]
    Pragma[/]=
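To verify that a configuration like this has the intended effect, you can inspect the headers of a response and estimate how long a cache may keep it. The sketch below uses a deliberately simplified rule set that follows this article (no "no-cache", "no-store" or "private" directives, and an "Expires" date in the future); it is not Squid's actual freshness algorithm, and `remaining_freshness` is a hypothetical helper name.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def remaining_freshness(headers, now=None):
    """Return seconds until Expires, or 0 if the response should not be cached."""
    now = now or datetime.now(timezone.utc)
    lowered = {name.lower(): value for name, value in headers.items()}

    # Simplified check: these directives tell caches not to store the response.
    if "no-cache" in lowered.get("pragma", "").lower():
        return 0
    cache_control = lowered.get("cache-control", "").lower()
    if any(token in cache_control for token in ("no-cache", "no-store", "private")):
        return 0

    try:
        expires = parsedate_to_datetime(lowered.get("expires", ""))
    except (TypeError, ValueError):
        return 0                  # missing or unparsable Expires header
    if expires.tzinfo is None:
        expires = expires.replace(tzinfo=timezone.utc)
    return int(max(0.0, (expires - now).total_seconds()))
```

With the settings above, a response fetched immediately after it was generated should report a value close to 300 seconds.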

Squid keeps an item in the cache until it has expired. There are three things that can expire a cached object:

- Timeout based on the "Expires" value in the HTTP header

- Manual browser refresh (for example, hitting the reload button)

- Forced cache clearing with squidclient purge

To force cache clearing of a specific item you can use the squidclient command line tool as shown below.

squidclient -m PURGE 'URL'
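If you want to trigger the same purge from a script, for example after publishing content, the request can be sent with Python's standard `http.client` module. This is a sketch under assumptions: `purge` and `connection_factory` are names invented here, the proxy host and port defaults are placeholders, and Squid must be configured to accept the PURGE method from the calling host, which it typically does not allow by default.

```python
import http.client


def purge(url, proxy_host="localhost", proxy_port=80,
          connection_factory=http.client.HTTPConnection):
    """Send a PURGE request for url to the proxy and return the HTTP status."""
    conn = connection_factory(proxy_host, proxy_port, timeout=5)
    try:
        # The full URL is sent in the request line, as a proxy expects.
        conn.request("PURGE", url)
        response = conn.getresponse()
        response.read()           # drain the body so the connection closes cleanly
        return response.status
    finally:
        conn.close()
```

The returned status code tells you whether the proxy accepted the request; check your Squid documentation for the codes it uses for purged and uncached items.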

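When testing Squid, remember that browsers send no-cache headers when the reload button is clicked. Squid then treats the cached item as expired and fetches it from the webserver again, so reloading in a browser does not show you cached performance. For proper testing, you should use a benchmarking tool such as ApacheBench or Siege.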
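Before running a full benchmark, a quick script can confirm whether a given URL is being served from the cache. The sketch below assumes that your Squid installation adds an "X-Cache" response header of the form "HIT from hostname" or "MISS from hostname"; whether it does depends on your Squid version and configuration. `cache_status` and `fetch_cache_status` are hypothetical helper names. Unlike a browser reload, `urllib.request` does not add no-cache headers on its own.

```python
import urllib.request


def cache_status(x_cache_value):
    """Return 'HIT' or 'MISS' from an X-Cache header value, else None."""
    if not x_cache_value:
        return None
    first_word = x_cache_value.split()[0].upper()
    return first_word if first_word in ("HIT", "MISS") else None


def fetch_cache_status(url):
    """Request url through the proxy and report what X-Cache says."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return cache_status(response.headers.get("X-Cache"))
```

Fetching the same URL twice in a row should typically report a miss followed by a hit if the page is cacheable and has not yet expired.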

We have now looked at what needs to be done to install and configure Squid for use with eZ Publish. Using Squid can improve performance drastically, especially under high load.