eZ Publish Performance Optimization Part 1 of 3: Introduction and Benchmarking

This article is the first in a three-part series on eZ Publish performance. Performance optimization involves assessing existing site capabilities, identifying problems and implementing solutions. This first article will introduce basic performance terminology and discuss tools to benchmark your site's performance.

The latter two articles will outline eZ Publish debugging and provide practical solutions for optimizing performance.

Throughout this series, we will focus on configuration and setup changes that can be done within eZ Publish. This does not include the hosting environment, typically consisting of Apache, MySQL, PHP and APC, which is also important for eZ Publish performance. See the related articles about the server environment, MySQL, clustering and server architecture for information about tuning the hosting environment.

Your website project's scale determines the architectural, hardware, configuration and setup needs. Below, we have listed some values that must be collected or estimated at the beginning of a project in order to set some performance-related goals to use as benchmarks. Obviously, a simple informational site for a small business will have different requirements than a dynamic, interactive website for a large corporation.

Statistics needed for creating benchmarks include:

- Number of pages (articles, products -- called "objects" in eZ Publish)

- Number of user accounts in the system

- Average number of pageviews per day

- Peak number of pageviews per day

- Concurrent visitors

- Content publishing rate -- how many objects are published per day
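As a rough illustration of how these figures can be turned into a benchmark target, the sketch below converts daily pageview estimates into pageviews per second. The class name, field names and sample numbers are hypothetical, and spreading traffic evenly over 24 hours is a simplifying assumption; real traffic is usually burstier.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 24 * 60 * 60


@dataclass
class ProjectScale:
    """Hypothetical container for the sizing figures listed above."""
    objects: int
    user_accounts: int
    avg_pageviews_per_day: int
    peak_pageviews_per_day: int
    concurrent_visitors: int
    objects_published_per_day: int

    def avg_pageviews_per_second(self) -> float:
        # Assumes traffic is spread evenly over the whole day.
        return self.avg_pageviews_per_day / SECONDS_PER_DAY

    def peak_pageviews_per_second(self) -> float:
        # Same even-spread assumption, applied to the busiest day.
        return self.peak_pageviews_per_day / SECONDS_PER_DAY


# Made-up sample values, for illustration only.
scale = ProjectScale(
    objects=50_000,
    user_accounts=2_000,
    avg_pageviews_per_day=432_000,
    peak_pageviews_per_day=864_000,
    concurrent_visitors=40,
    objects_published_per_day=100,
)
print(f"average day: {scale.avg_pageviews_per_second():.1f} pageviews/s")
print(f"peak day:    {scale.peak_pageviews_per_second():.1f} pageviews/s")
```

The per-second figures can later be compared with the pageviews per second reported by the benchmarking tools.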

Requirements

Once you establish the size of the project, you need to compare this with customer requirements (or your requirements, if you are the "customer"). There are often requirements about page load time, maximum load time, transfer time and other performance measures. It is important to be clear on the expectations and on what is to be delivered.

Performance terms

Before we can assess a system's capabilities, we must be familiar with some basic terminology. Below is a list of performance terms and definitions.

Performance

Performance is a general term that refers to how fast the server is capable of handling one request. Performance affects every user's experience.

Latency

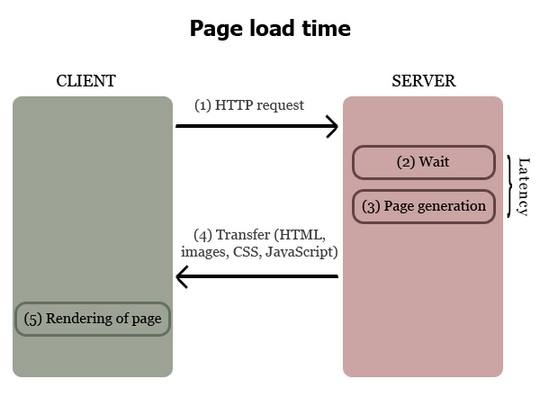

This is the time between when the request is sent to the webserver and when the webserver first responds. It can include a wait before page generation starts.

Page generation time

This is the time taken by the server to generate a dynamic page and prepare it for transfer to the user.

Page transfer time

This is the time taken to transfer the page from the server to the user.

Page load time

This is the total time from when the request is sent from the client until the page is completely displayed in the client's browser. This includes the transfer of HTML, CSS, images and any other elements needed from the server to display the complete page. Typically, this also includes the actual rendering time by the visitor's browser.

This is the total wait time that the user experiences when accessing a page. If this is slow, there are several possible causes:

- Page generation time on the server

- Element transfer time

- Rendering time in the browser

The components of page load time
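To make the relationship between these components concrete, here is a small sketch that adds the three parts together and reports the largest one. The function name and the sample timings are hypothetical, and the sketch assumes the phases run one after another without overlapping, which real browsers do not strictly do.

```python
def page_load_breakdown(generation_ms: float, transfer_ms: float, rendering_ms: float):
    """Return the total page load time and the name of the slowest component."""
    parts = {
        "generation": generation_ms,  # page generation time on the server
        "transfer": transfer_ms,      # element transfer time
        "rendering": rendering_ms,    # rendering time in the browser
    }
    total = sum(parts.values())
    slowest = max(parts, key=parts.get)
    return total, slowest


# Made-up timings in milliseconds.
total, slowest = page_load_breakdown(120, 300, 80)
print(f"page load time: {total} ms, slowest part: {slowest}")
```

Knowing which component dominates tells you whether to look at the server, the network or the page design first.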

Concurrency

Concurrency is a term that defines how many parallel requests can be handled by the server at the same time.

Scalability

Scalability defines how well the system can handle an increase in concurrent visitors while maintaining a specified level of performance.

Benchmarking

This is the practice of comparing current performance levels to a set of requirements or standards.

In order to make performance improvements, you need to first identify the performance capabilities of your system.

Benchmarking

ApacheBench ("ab"), http_load and siege are three useful benchmarking tools that are run from the client or server command line to test webserver performance. Selenium IDE is a benchmarking tool that runs in a browser, described in a previous article about Selenium.

In this article, we will outline the usage of ab, siege and http_load. These tools can be run on either the server or the client. Running the tools on the client can help to identify network delays, while running the tools on the server will eliminate such delays from the test results.

Our example tests in this article will use two parameters: concurrency and number of requests. "Hammering" is the term used to describe repeated requests during testing. It is important to know the type of architecture you are running on the server when choosing concurrency levels.

For example, a Pentium 4 CPU with hyperthreading technology can run two threads in parallel, so two concurrent requests deliver noticeably more throughput than one, although less than double because both threads share one physical core. If you have a server with more than one such CPU, multiply the number of CPUs by two to get a starting concurrency level. In our case we used dual Xeon servers and ran a concurrency of 4 or more to get the maximum performance from the server.
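The rule of thumb above (number of CPUs multiplied by two) can be written down as a one-line helper. The function name is hypothetical, and the default of two threads per CPU is an assumption that only holds for hyperthreaded CPUs like the ones described here.

```python
def starting_concurrency(cpus: int, threads_per_cpu: int = 2) -> int:
    """Suggest a first concurrency level to try when benchmarking."""
    if cpus < 1 or threads_per_cpu < 1:
        raise ValueError("cpus and threads_per_cpu must be at least 1")
    return cpus * threads_per_cpu


# A dual-CPU server with hyperthreading, as in the article's test setup.
print(starting_concurrency(2))
```

This is only a starting point; the benchmarks themselves show where throughput stops improving.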

At the most basic level, we are interested in discovering the page generation and transfer times of different pages. This is usually expressed in pageviews per second or other similar results such as percentage of requests served within a certain time.

Monitoring tools

When you are running benchmarking tests, it is useful to use a monitoring tool such as top or vmstat to display continuous information about server parameters.

Check that I/O load (that is, reads from and writes to disk, including swap) is not limiting performance. When benchmarking an eZ Publish site that is properly configured, you should be limited by the CPU and not the I/O. You should see close to 100% CPU usage when hammering the server. If the I/O shows a high load, this might indicate that the server is out of memory and that memory is being swapped to the hard drive. Ideally the load average should be steady at approximately 1-2, which means that there are only one or two processes waiting for the CPU.

If your application uses the MySQL database heavily, you should monitor the health of MySQL while hammering the server. You can use mtop to monitor what MySQL is doing and identify slow queries.
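If you prefer to log the load from a script rather than watch top, a minimal sketch could look like this. The function names are hypothetical, the upper limit of 2 is taken from the guideline above, and os.getloadavg() is only available on Unix-like systems.

```python
import os


def load_status(load_average: float, upper_limit: float = 2.0) -> str:
    """Classify a load average against the rough 1-2 guideline."""
    return "ok" if load_average <= upper_limit else "high"


def current_load_status() -> str:
    """Read the 1-minute load average of this machine and classify it."""
    one_minute, _five, _fifteen = os.getloadavg()  # Unix-like systems only
    return load_status(one_minute)


print(load_status(1.5))
print(load_status(6.0))
```

Calling current_load_status() on the server at intervals during a benchmark run gives a simple record of whether the load stayed in the expected range.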

We will not detail the top, vmstat and mtop commands in this article, but you can find information about them on their respective webpages or by running the "man" command.

ApacheBench, or "ab", is a small tool bundled with the Apache webserver that is used to check the maximum performance of your webserver or web application. It can only benchmark one URL at a time and does not include images, CSS files or other items included on a webpage. It basically hammers the URL and records how much time the webserver spends on serving the pages.

Concurrency is specified by the parameter -c and number of requests is specified with -n.

Examples

Here is an ab example with eZ Publish running on one server. We fetch 20 requests with concurrency ranging from 1-4. As you will see from the table below, the server scales well with 4 concurrent requests. This is also the maximum performance delivered from this server.

The commands used are as follows:

ab -c 1 -n 20 http://olympia.ezcluster.ez.no/base/

ab -c 2 -n 20 http://olympia.ezcluster.ez.no/base/

ab -c 3 -n 20 http://olympia.ezcluster.ez.no/base/

ab -c 4 -n 20 http://olympia.ezcluster.ez.no/base/
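Repeating these runs by hand gets tedious, so here is a minimal sketch of how they could be scripted. The helper names ab_command and run_ab are hypothetical, the script assumes the ab binary is on the PATH, and only the -c and -n options described above are used.

```python
import subprocess


def ab_command(url: str, concurrency: int, requests: int = 20) -> list:
    """Build the ab command line for one benchmark run."""
    return ["ab", "-c", str(concurrency), "-n", str(requests), url]


def run_ab(url: str, concurrency: int, requests: int = 20) -> str:
    """Run ab and return its textual report (requires ab to be installed)."""
    result = subprocess.run(
        ab_command(url, concurrency, requests),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


if __name__ == "__main__":
    # Print the four commands from the article; call run_ab() to execute them.
    for level in range(1, 5):
        print(" ".join(ab_command("http://olympia.ezcluster.ez.no/base/", level)))
```

Passing the command as a list avoids shell quoting problems if the URL contains characters such as & or ?.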

Below is a very simple table that you could create from ab output in order to demonstrate the server's ability to deal with an increasing number of concurrent requests:

| Concurrent requests | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Pages per second | 14.0 | 24.8 | 31.0 | 35.5 |
| Relative performance | 100% | 177% | 221% | 254% |

In this case, the theoretical maximum number of pageviews for this server is about 3 million (35.5*60*60*24) per day.
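The arithmetic behind the table and the daily estimate is simple enough to script. The sketch below uses the measured pages-per-second figures from the article; the function names are hypothetical.

```python
SECONDS_PER_DAY = 60 * 60 * 24

# Pages per second measured with ab, keyed by concurrency level.
pages_per_second = {1: 14.0, 2: 24.8, 3: 31.0, 4: 35.5}


def relative_performance(results: dict) -> dict:
    """Express each rate as a percentage of the lowest concurrency level's rate."""
    baseline = results[min(results)]
    return {level: round(rate / baseline * 100) for level, rate in results.items()}


def max_pageviews_per_day(rate_per_second: float) -> float:
    """Theoretical daily maximum if the server ran flat out all day."""
    return rate_per_second * SECONDS_PER_DAY


print(relative_performance(pages_per_second))
print(max_pageviews_per_day(max(pages_per_second.values())))
```

Keep in mind that the daily figure is a ceiling: it assumes the peak rate is sustained for 24 hours, which real traffic never does.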

Here is another sample command and ab's resulting output:

$ ab -c 5 -n 100 http://mycachedsite.example.com/

This is ApacheBench, Version 1.3d <$Revision: 1.73 $> apache-1.3

Copyright (c) 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Copyright (c) 1998-2002 The Apache Software Foundation, http://www.apache.org/

Benchmarking db.no (be patient).....done

Server Software: Apache/1.3.33

Server Hostname: db.no

Server Port: 80

Document Path: /

Document Length: 228 bytes

Concurrency Level: 5

Time taken for tests: 1.424 seconds

Complete requests: 100

Failed requests: 0

Broken pipe errors: 0

Non-2xx responses: 100

Total transferred: 42200 bytes

HTML transferred: 22800 bytes

Requests per second: 70.22 [#/sec] (mean)

Time per request: 71.20 [ms] (mean)

Time per request: 14.24 [ms] (mean, across all concurrent requests)

Transfer rate: 29.63 [Kbytes/sec] received

Connnection Times (ms)

min mean[+/-sd] median max

Connect: 13 31 5.5 31 44

Processing: 32 39 26.6 36 294

Waiting: 19 39 26.6 36 294

Total: 32 70 27.6 70 327

Percentage of the requests served within a certain time (ms)

50% 70

66% 71

75% 71

80% 72

90% 75

95% 83

98% 109

99% 327

100% 327 (last request)

What it is not

Ab is not a tool you can use to generate a natural load on the server. In such a case, you should use a tool like siege (described later in this article).

Further help

You can also run ab -h to see a list of all parameters ab accepts. For example, ab can log in to a website that is protected by HTTP basic authentication.
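When you compare several ab runs, it helps to pull the key figures out of each report automatically. The following is a minimal sketch, assuming the report layout shown in the sample output earlier in this section; the function name and the dictionary keys are hypothetical.

```python
import re

# Regular expressions for a few summary lines of an ab report.
AB_PATTERNS = {
    "failed_requests": r"Failed requests:\s+(\d+)",
    "requests_per_second": r"Requests per second:\s+([\d.]+)",
    # Matches only the first "Time per request" line, which ends in "(mean)".
    "time_per_request_ms": r"Time per request:\s+([\d.]+)\s+\[ms\]\s+\(mean\)",
}


def parse_ab_report(report: str) -> dict:
    """Extract selected numbers from the text printed by ab."""
    values = {}
    for name, pattern in AB_PATTERNS.items():
        match = re.search(pattern, report)
        if match:
            values[name] = float(match.group(1))
    return values


sample = """Failed requests: 0
Requests per second: 70.22 [#/sec] (mean)
Time per request: 71.20 [ms] (mean)
Time per request: 14.24 [ms] (mean, across all concurrent requests)
"""
print(parse_ab_report(sample))
```

Lines that are missing from a report are simply left out of the result, so the caller can decide how to treat incomplete runs.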

http_load is a multiplexing HTTP test client. It is capable of generating a significant amount of HTTP traffic even on modest hardware. http_load runs multiple HTTP fetches in parallel to test the throughput of a web server. This shows how many connections a server can handle, that is, how many requests can be served in a given time period. http_load can emulate a large number of low-bandwidth connections by throttling bandwidth (that is, limiting the rate of data transfer). Because http_load runs in a single process, it does not bog down the client machine.

Installation

You can download the http_load source from the project site.

You can compile http_load from the source. Before you install http_load, make sure that your system has standard development tools such as autoconf and libtool installed. Access to the root account might be needed as well.

The basic commands you must execute to compile and install http_load from the source are:

shell> su -

shell> cd /usr/local/src

shell> gunzip < /PATH/TO/http_load-version.tar.gz | tar xvf -

shell> cd http_load-version

shell> make

shell> make install

An http_load binary will be installed under /usr/local/bin/. If you install http_load as a non-root user, make sure that you have write permission to that folder.

Once http_load is installed in your system, you can access the manual page by executing:

shell> man http_load

Testing

http_load requires at least 3 parameters:

- One start specifier, either -parallel or -rate

  -parallel tells http_load to make the specified number of concurrent requests.

  -rate tells http_load to start the specified number of new connections each second. If you use the -rate start specifier, you can specify a -jitter flag parameter that tells http_load to vary the rate randomly by about 10%.

- One end specifier, either -fetches or -seconds

  -fetches tells http_load to quit when the specified number of fetches have been completed.

  -seconds tells http_load to quit after the specified number of seconds have elapsed.

- A file containing a list of URLs to fetch

  The urls parameter specifies a text file containing a list of URLs, one per line. The requested URLs are chosen randomly from this file.
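The "one start specifier, one end specifier, one URL file" rule can be checked before a test is launched. Here is a minimal sketch of such a check; the function name and its keyword arguments are hypothetical, and only the flags described above are generated.

```python
def http_load_command(url_file, *, parallel=None, rate=None, fetches=None, seconds=None):
    """Build an http_load command line with exactly one start and one end specifier."""
    if (parallel is None) == (rate is None):
        raise ValueError("give exactly one of parallel or rate")
    if (fetches is None) == (seconds is None):
        raise ValueError("give exactly one of fetches or seconds")

    command = ["http_load"]
    if parallel is not None:
        command += ["-parallel", str(parallel)]
    else:
        command += ["-rate", str(rate)]
    if fetches is not None:
        command += ["-fetches", str(fetches)]
    else:
        command += ["-seconds", str(seconds)]
    command.append(url_file)
    return command


# Five parallel requests for ten seconds, reading URLs from urls.txt.
print(" ".join(http_load_command("urls.txt", parallel=5, seconds=10)))
```

The resulting list can be passed directly to subprocess.run() on a machine where http_load is installed.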

Here is an example test showing the output results. This test runs for ten seconds, with five parallel requests:

$ http_load -parallel 5 -seconds 10 urls.txt

185 fetches, 5 max parallel, 10545 bytes, in 10.0084 seconds

57 mean bytes/connection

18.4845 fetches/sec, 1053.62 bytes/sec

msecs/connect: 0.211719 mean, 12.859 max, 0.044 min

msecs/first-response: 267.173 mean, 1465.58 max, 50.509 min

HTTP response codes:

code 200 -- 185

As you can see, the resulting performance is about 18.5 fetches (or pageviews) per second. To clearly assess existing performance, you should load the server heavily and run the test for longer and varying periods of time.
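Since longer and repeated runs produce many such reports, it can be useful to extract the headline numbers with a script. The sketch below assumes the output format shown in the example above; the function name and dictionary keys are hypothetical.

```python
import re


def parse_http_load(output: str) -> dict:
    """Extract the headline figures from http_load's summary output."""
    totals = re.search(r"(\d+) fetches, (\d+) max parallel", output)
    rate = re.search(r"([\d.]+) fetches/sec", output)
    first_response = re.search(r"msecs/first-response: ([\d.]+) mean", output)
    if not (totals and rate and first_response):
        raise ValueError("output does not look like an http_load summary")
    return {
        "fetches": int(totals.group(1)),
        "max_parallel": int(totals.group(2)),
        "fetches_per_second": float(rate.group(1)),
        "first_response_mean_ms": float(first_response.group(1)),
    }


sample = """185 fetches, 5 max parallel, 10545 bytes, in 10.0084 seconds
57 mean bytes/connection
18.4845 fetches/sec, 1053.62 bytes/sec
msecs/connect: 0.211719 mean, 12.859 max, 0.044 min
msecs/first-response: 267.173 mean, 1465.58 max, 50.509 min
"""
print(parse_http_load(sample))
```

The mean first-response time is the figure closest to the latency defined earlier in this article.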

Siege is useful for checking the performance of a web application in an environment similar to what visitors encounter. While most tools find the maximum number of pages served per second, siege can also simulate random wait intervals, thus mimicking real user interactions where requests are not generated at regular intervals.

You can set up a script of different URLs to be benchmarked. Siege even supports GET and POST as well as cookies, so you can create sessions that emulate logged-in users.

Basic testing

The most basic siege test is specifying the concurrency level and the number of times to repeat the test. The command below shows how you can run siege with a concurrency level of 4 and repeat the test 10 times for the given URL.

# siege -b -c 4 -r 10 -u http://192.168.0.3/base/lots_of_content/parturient_id

Transactions: 40 hits

Availability: 100.00 %

Elapsed time: 1.04 secs

Data transferred: 1196640 bytes

Response time: 0.10 secs

Transaction rate: 38.46 trans/sec

Throughput: 1150615.43 bytes/sec

Concurrency: 3.78

Successful transactions: 40

Failed transactions: 0

Longest transaction: 0.11

Shortest transaction: 0.08

For benchmarking, always use the -b or --benchmark parameter. If this is not specified, siege will insert a random delay to simulate actual user traffic.

You can also test for a given time period by using the -t parameter instead of -r (repeats). This specifies that the testing should be done for x number of seconds, minutes or hours (S, M, H).

For example, you can run the same test above but for 10 seconds:

# siege -b -c 4 -t 10S -u http://192.168.0.3/base/lots_of_content/parturient_id

Transactions: 411 hits

Availability: 100.00 %

Elapsed time: 10.18 secs

Data transferred: 12295476 bytes

Response time: 0.10 secs

Transaction rate: 40.37 trans/sec

Throughput: 1207807.04 bytes/sec

Concurrency: 3.96

Successful transactions: 411

Failed transactions: 0

Longest transaction: 0.11

Shortest transaction: 0.08
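The siege summary is a list of "name: value unit" pairs, which makes it easy to load into a dictionary for comparison between runs. This is a minimal sketch, assuming siege prints one statistic per line as it does on a terminal; the function name is hypothetical.

```python
def parse_siege_summary(output: str) -> dict:
    """Map each statistic name in a siege summary to its numeric value."""
    summary = {}
    for line in output.splitlines():
        name, separator, rest = line.partition(":")
        fields = rest.split()
        if not separator or not fields:
            continue
        try:
            summary[name.strip()] = float(fields[0])
        except ValueError:
            # Not a statistic line (for example the command line itself).
            continue
    return summary


sample = """Transactions: 411 hits
Availability: 100.00 %
Elapsed time: 10.18 secs
Response time: 0.10 secs
Transaction rate: 40.37 trans/sec
Concurrency: 3.96
Failed transactions: 0
"""
stats = parse_siege_summary(sample)
print(stats["Transaction rate"], stats["Concurrency"])
```

Units such as "hits" or "secs" are dropped, so the caller needs to know what each statistic measures.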

Benchmark scripts

For more realistic tests of webserver traffic, use the urls.txt file, which can, for example, be stored in the root of your home folder. This file is a collection of URLs that will be used for testing. To create a test where you fetch 10 different URLs, you can use the urls.txt file as shown below. (Note that the first line is not part of the contents of the file.)

# cat urls.txt

http://192.168.0.4/base/lots_of_content/venenatis_cras

http://192.168.0.4/base/lots_of_content/egestas_commodo

http://192.168.0.4/base/lots_of_content/parturient_id

http://192.168.0.4/base/lots_of_content/curae

http://192.168.0.4/base/lots_of_content/ut

http://192.168.0.4/base/lots_of_content/mattis_facilisis

http://192.168.0.4/base/lots_of_content/torquent_ante

http://192.168.0.4/base/lots_of_content/quam_urna

http://192.168.0.4/base/lots_of_content/parturient_aptent

http://192.168.0.4/base/lots_of_content/quis
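If the pages you want to test share a common base URL, a file like this can be generated rather than typed by hand. The sketch below is one way to do it; the function names are hypothetical and the paths are taken from the example file above.

```python
from pathlib import Path


def build_url_list(base_url: str, paths: list) -> list:
    """Join a base URL with each path, avoiding doubled or missing slashes."""
    base = base_url.rstrip("/")
    return [base + "/" + path.lstrip("/") for path in paths]


def write_url_file(urls: list, target: str) -> None:
    """Write one URL per line, the format used by urls.txt."""
    Path(target).write_text("\n".join(urls) + "\n", encoding="utf-8")


urls = build_url_list(
    "http://192.168.0.4/base/lots_of_content/",
    ["venenatis_cras", "egestas_commodo", "parturient_id"],
)
for url in urls:
    print(url)
# To save the list, call for example: write_url_file(urls, "urls.txt")
```

The file is only written when write_url_file() is called, so the list can be inspected first.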

siege can then be run as follows:

siege -b -r 10 -c 4

Transactions: 40 hits

Availability: 100.00 %

Elapsed time: 1.45 secs

Data transferred: 1555332 bytes

Response time: 0.14 secs

Transaction rate: 27.59 trans/sec

Throughput: 1072642.72 bytes/sec

Concurrency: 3.83

Successful transactions: 40

Failed transactions: 0

Longest transaction: 0.15

Shortest transaction: 0.11

URL harvesting with sproxy

Sproxy is a handy utility that harvests a list of URLs that can be used for benchmarking. It includes all files sent from the server, including images and CSS files.

Once installed and started, sproxy listens as a proxy on port 9001 on your computer. In your web browser, choose localhost and port 9001 for your proxy settings. Sproxy will then log all your web activity in the file urls.txt in your home folder.

The output of sproxy looks like this:

$ /usr/local/bin/sproxy

SPROXY v1.01 listening on port 9001

...appending HTTP requests to: /Users/bf/urls.txt

...default connection timeout: 120 seconds

The recorded values in urls.txt might look something like this:

http://10.0.2.83/base/forums/small_talk

http://10.0.2.83/design/base/images/sticky-16x16-icon.gif

http://10.0.2.83/base/user/login

http://10.0.2.83/base/user/login POST Login=admin&Password=publish&LoginButton=Login&RedirectURI=
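Before replaying a recorded file, you may want to check which entries are plain page requests and which are form submissions. The following sketch splits a recorded line into URL, method and POST data, assuming the "URL POST data" layout shown in the sample above; the function name is hypothetical.

```python
def parse_url_line(line: str) -> dict:
    """Split one urls.txt line into its URL, HTTP method and POST body."""
    fields = line.strip().split(" ", 2)
    url = fields[0]
    if len(fields) >= 2 and fields[1] == "POST":
        body = fields[2] if len(fields) > 2 else ""
        return {"url": url, "method": "POST", "body": body}
    # Lines with only a URL are fetched with GET.
    return {"url": url, "method": "GET", "body": ""}


recorded = [
    "http://10.0.2.83/base/forums/small_talk",
    "http://10.0.2.83/base/user/login POST Login=admin&Password=publish&LoginButton=Login&RedirectURI=",
]
for entry in recorded:
    request = parse_url_line(entry)
    print(request["method"], request["url"])
```

Note that recorded POST lines can contain passwords in clear text, so treat the file accordingly.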

Then you can simply run siege as normal, using this newly generated URLs file.

In this article we have introduced some key performance terminology and discussed three benchmarking tools.

The three benchmarking tools mentioned all have unique benefits. Ab is the simplest and comes pre-installed with Apache. Both siege and http_load handle multiple URLs. Siege can be used with sproxy to generate and test a URL list mimicking a user's interaction with the site, while http_load does a good job at measuring latency.

You can use various performance statistics to fine-tune your eZ Publish site. In the next article in this series, we will look at debugging with eZ Publish; the third and final article in the series will describe concrete solutions to improve your eZ Publish site's performance.